Reposted from 成长之路丶 on Jianshu (简书)

Goal:

This crawl targets the investment-events module of IT桔子 (ITjuzi, itjuzi.com) and stores the scraped data in a MySQL database.

Analysis:

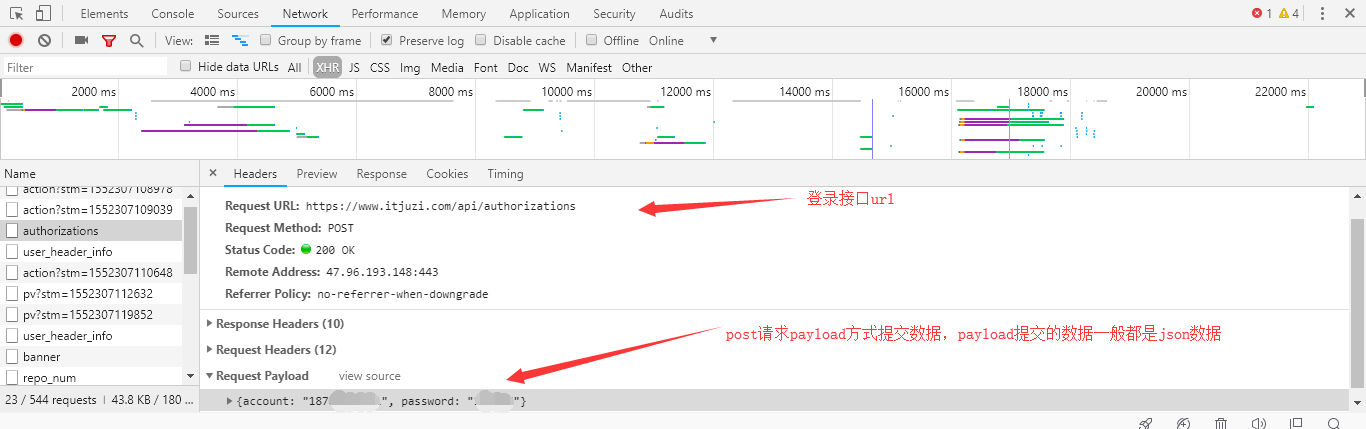

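Browsing the site shows that the events module can only be accessed after logging in, so we first need to log in. Capture the login API request: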

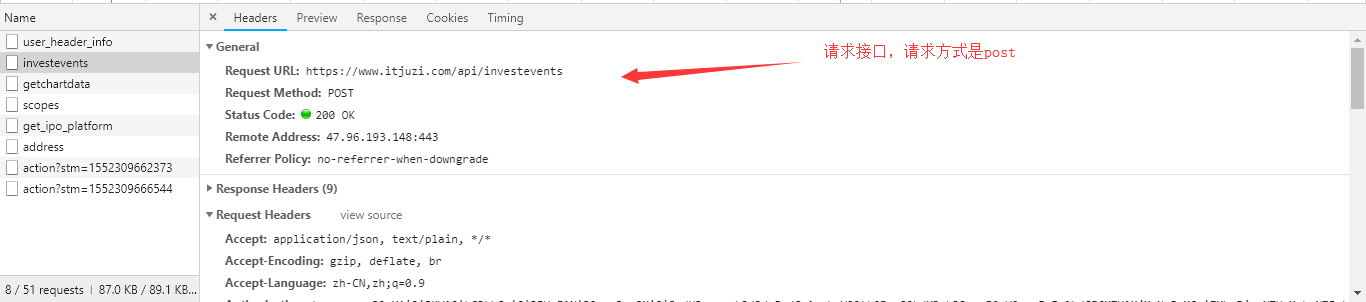

The ITjuzi login endpoint is https://www.itjuzi.com/api/authorizations. It is a POST request whose data is sent as a request payload in JSON format (request payloads are usually JSON). Next, look at the response:
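As a rough illustration of that request outside the browser, the stdlib sketch below builds the same POST with a JSON body but does not send it. The `account`/`password` keys are taken from the spider later in this article; `build_login_request` is a hypothetical helper name.

```python
import json
import urllib.request

LOGIN_URL = "https://www.itjuzi.com/api/authorizations"


def build_login_request(account: str, password: str) -> urllib.request.Request:
    """Build (but do not send) the JSON-payload login POST."""
    # Payload keys match the ones the Scrapy spider sends.
    body = json.dumps({"account": account, "password": password}).encode("utf-8")
    return urllib.request.Request(
        LOGIN_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_login_request("your_account", "your_password")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # Sending it would be urllib.request.urlopen(req), which needs network access.
```

The point of the sketch is only that the body is raw JSON with a matching `Content-Type`, not form fields.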

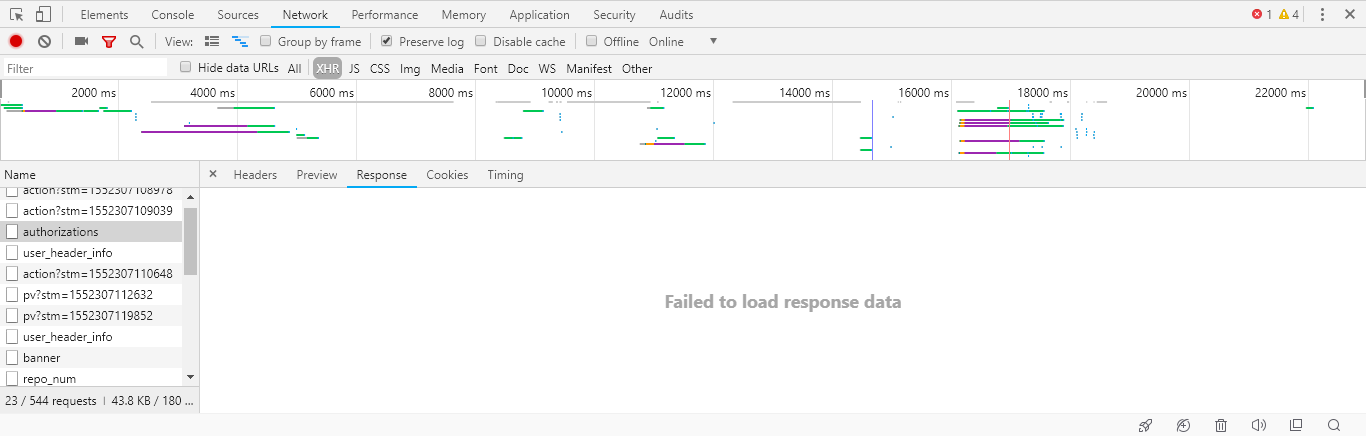

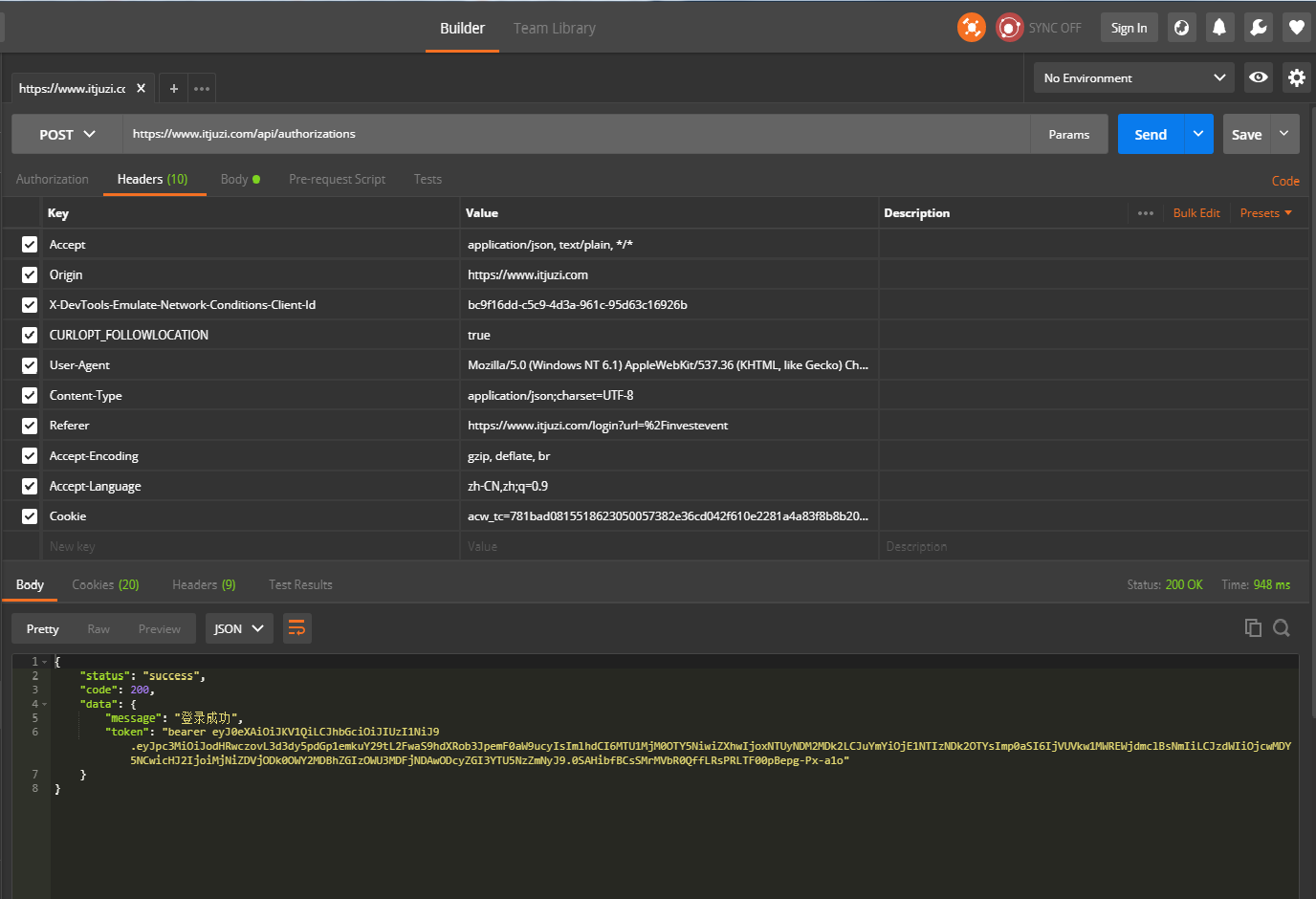

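The response panel appears empty. There actually is a response body; the F12 developer tools just don't display it. We can use Postman to view the response body: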

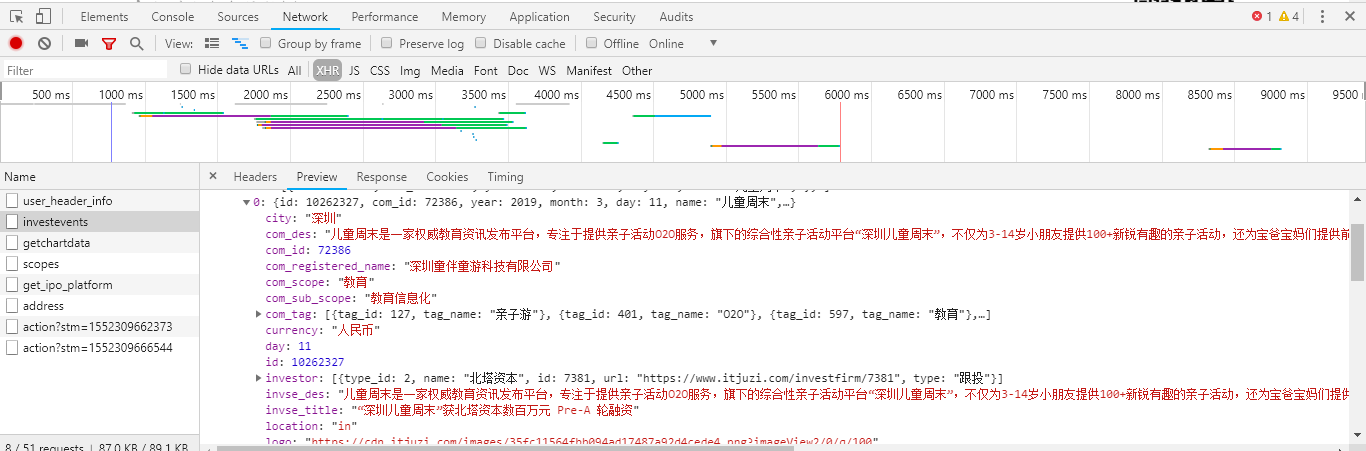

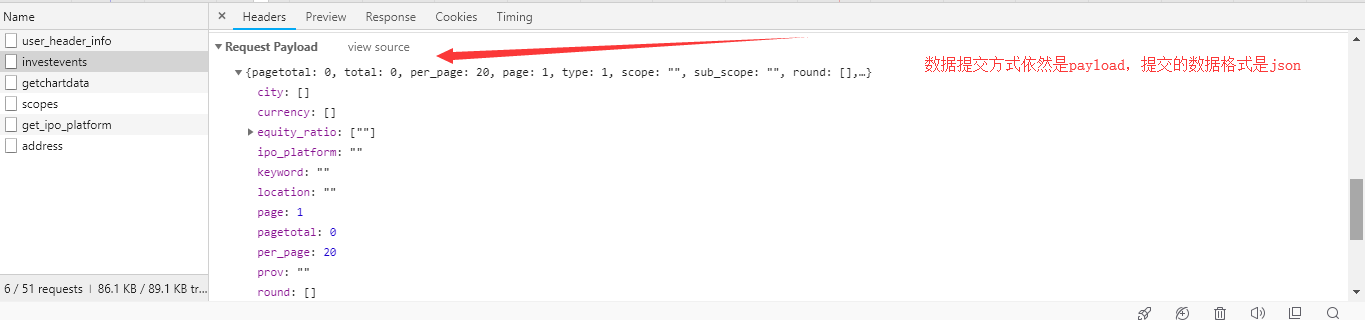

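The response body is JSON. Set it aside for now and analyze the events module: capturing traffic with F12 shows that the events data is actually loaded by an ajax request: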

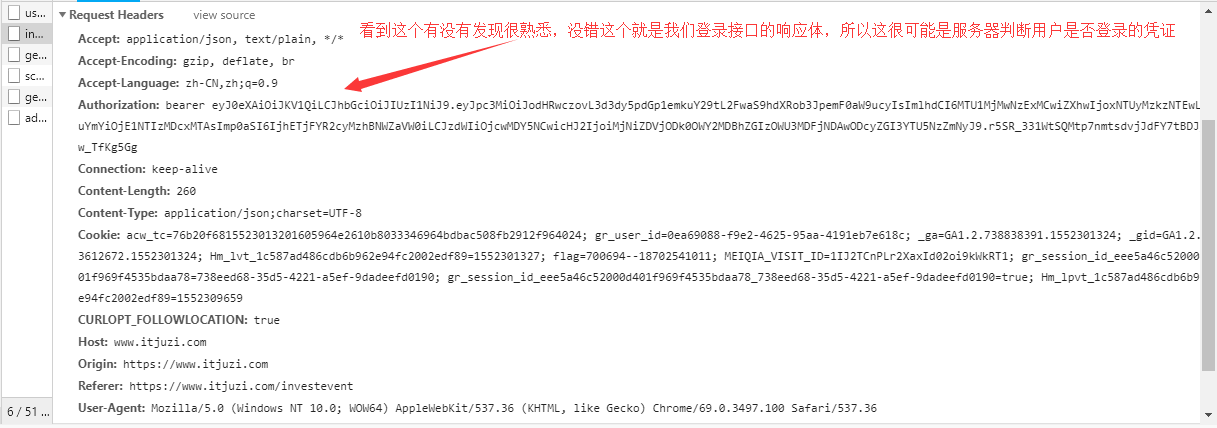

The ajax request returns JSON. Now look at its headers:

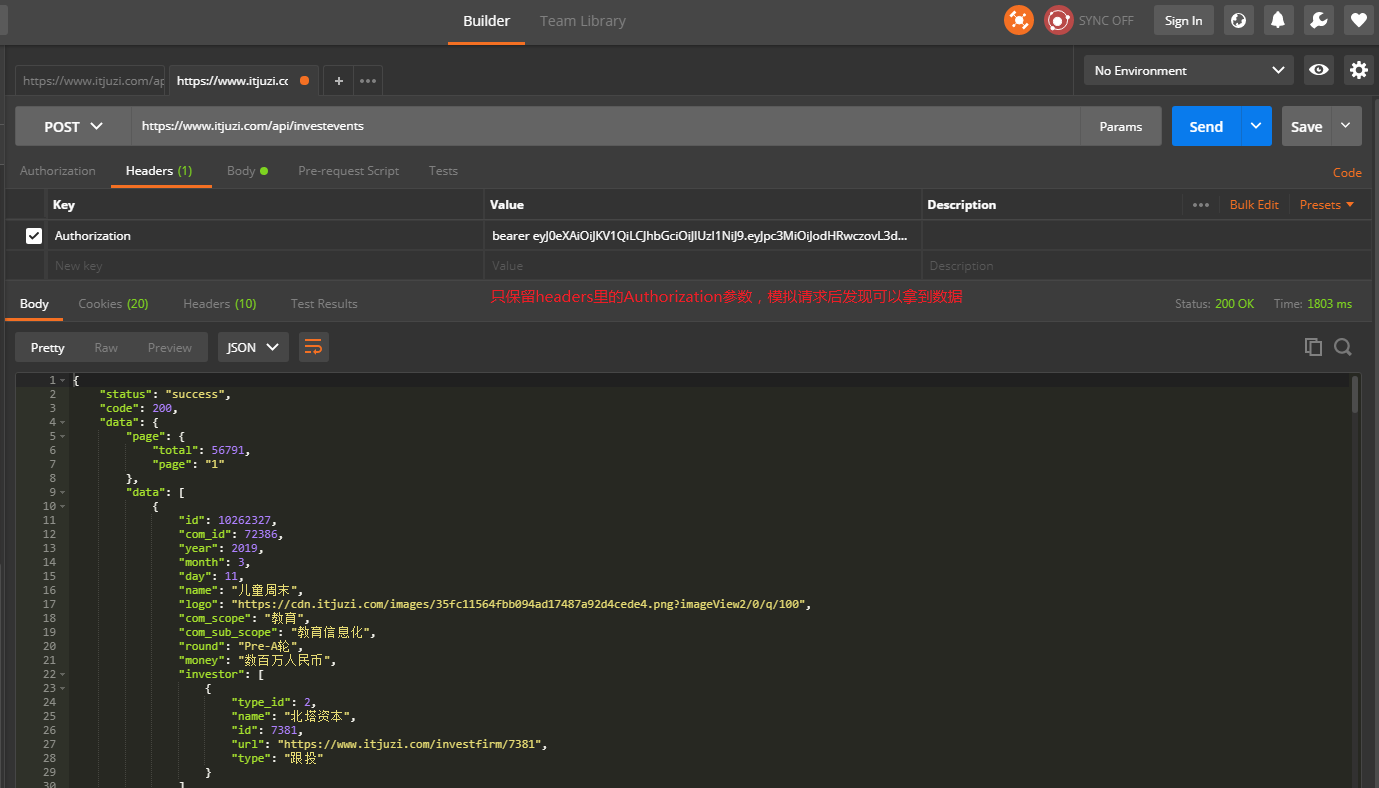

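The headers include an Authorization parameter whose value is exactly the token field of the JSON returned by the login endpoint, so this parameter is very likely the credential the server uses to check whether we are logged in. We can test this by simulating the request in Postman.

The Postman test confirms our guess: as long as this parameter is added to the request headers, the corresponding data comes back.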
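Put together, the flow is: read the token out of the login response, then send it back in the `Authorization` header. The stdlib sketch below builds that second request without sending it. It assumes the login JSON has the shape `{"data": {"token": ...}}` (the path the spider later reads) and that the raw token, with no `Bearer` prefix, is the header value, as seen in F12. Function names are illustrative.

```python
import json
import urllib.request

EVENTS_URL = "https://www.itjuzi.com/api/investevents"


def extract_token(login_response_text: str) -> str:
    """Return data.token from the login response JSON (assumed shape)."""
    return json.loads(login_response_text)["data"]["token"]


def build_events_request(token: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) an events-list POST that carries the token."""
    return urllib.request.Request(
        EVENTS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The raw token is the whole header value.
            "Authorization": token,
        },
        method="POST",
    )


if __name__ == "__main__":
    token = extract_token('{"data": {"token": "example-token"}}')
    req = build_events_request(token, {"page": 1, "per_page": 20})
    print(req.get_header("Authorization"))
```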

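With data access solved, let's look at pagination. Comparing the JSON submitted for page 1 and page 2 reveals a few key parameters: page, pagetotal and per_page, which are the requested page, the total number of records, and the number of records per page. From pagetotal and per_page we can compute the total number of pages. That completes the analysis, and we can start writing the program.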
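The page count is a ceiling division of the record total by the page size. A small sketch, assuming the total is read from `data.page.total` in the list response (the path the spider below uses) and 20 records per page; integer arithmetic avoids float rounding entirely:

```python
import json


def total_pages(total, per_page: int = 20) -> int:
    """Pages needed for `total` records at `per_page` records each (ceiling division)."""
    if per_page <= 0:
        raise ValueError("per_page must be positive")
    return (int(total) + per_page - 1) // per_page


def pages_from_response(response_text: str, per_page: int = 20) -> int:
    """Read data.page.total from an events-list response and convert it to a page count."""
    total = json.loads(response_text)["data"]["page"]["total"]
    return total_pages(total, per_page)


if __name__ == "__main__":
    print(total_pages(41))  # 41 records at 20 per page
    print(total_pages(40))
```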

Writing the Scrapy code

1. Create the Scrapy project and spider:

```
E:\>scrapy startproject itjuzi
E:\>scrapy genspider juzi itjuzi.com
```

2. Write items.py:

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class ItjuziItem(scrapy.Item):
    # Every key the spider assigns must be declared here;
    # assigning an undeclared key raises KeyError.
    invse_des = scrapy.Field()            # event description
    invse_title = scrapy.Field()          # event title
    money = scrapy.Field()                # amount
    com_name = scrapy.Field()             # company name
    com_des = scrapy.Field()              # company description
    prov = scrapy.Field()                 # province
    round = scrapy.Field()                # funding round
    invse_time = scrapy.Field()           # event date
    city = scrapy.Field()
    com_registered_name = scrapy.Field()  # registered company name
    com_scope = scrapy.Field()
    invse_company = scrapy.Field()        # investors, comma-separated
```

3. Write the spider:

```python
import json

import scrapy

from itjuzi.items import ItjuziItem
from itjuzi.settings import JUZI_PWD, JUZI_USER


class JuziSpider(scrapy.Spider):
    name = 'juzi'
    allowed_domains = ['itjuzi.com']

    def start_requests(self):
        """
        Log in to ITjuzi first.
        """
        url = "https://www.itjuzi.com/api/authorizations"
        payload = {"account": JUZI_USER, "password": JUZI_PWD}
        # scrapy.FormRequest form-encodes its data, so a JSON body has to be sent
        # with a plain scrapy.Request, setting method, body and headers explicitly
        yield scrapy.Request(url=url,
                             method="POST",
                             body=json.dumps(payload),
                             headers={'Content-Type': 'application/json'},
                             callback=self.parse
                             )

    def parse(self, response):
        # Parse the login response; the Authorization value is at data.token
        token = json.loads(response.text)
        url = "https://www.itjuzi.com/api/investevents"
        payload = {
            "pagetotal": 0, "total": 0, "per_page": 20, "page": 1, "type": 1, "scope": "", "sub_scope": "",
            "round": [], "valuation": [], "valuations": "", "ipo_platform": "", "equity_ratio": [""],
            "status": "", "prov": "", "city": [], "time": [], "selected": "", "location": "", "currency": [],
            "keyword": ""
        }
        yield scrapy.Request(url=url,
                             method="POST",
                             body=json.dumps(payload),
                             meta={'token': token},
                             # Put the Authorization value into the headers
                             headers={'Content-Type': 'application/json', 'Authorization': token['data']['token']},
                             callback=self.parse_info
                             )

    def parse_info(self, response):
        # Retrieve the login response passed along in meta
        token = response.meta["token"]
        # Total number of records
        total = int(json.loads(response.text)["data"]["page"]["total"])
        # 20 records per page; round up with integer ceiling division
        # (41 records -> 3 pages, 40 records -> 2 pages)
        page = (total + 20 - 1) // 20

        url = "https://www.itjuzi.com/api/investevents"
        for i in range(1, page + 1):
            payload = {
                "pagetotal": total, "total": 0, "per_page": 20, "page": i, "type": 1, "scope": "", "sub_scope": "",
                "round": [], "valuation": [], "valuations": "", "ipo_platform": "", "equity_ratio": [""],
                "status": "", "prov": "", "city": [], "time": [], "selected": "", "location": "", "currency": [],
                "keyword": ""
            }
            yield scrapy.Request(url=url,
                                 method="POST",
                                 body=json.dumps(payload),
                                 headers={'Content-Type': 'application/json', 'Authorization': token['data']['token']},
                                 callback=self.parse_detail
                                 )

    def parse_detail(self, response):
        infos = json.loads(response.text)["data"]["data"]
        for i in infos:
            item = ItjuziItem()
            item["invse_des"] = i["invse_des"]
            item["com_des"] = i["com_des"]
            item["invse_title"] = i["invse_title"]
            item["money"] = i["money"]
            item["com_name"] = i["name"]
            item["prov"] = i["prov"]
            item["round"] = i["round"]
            # Event date as year-month-day
            item["invse_time"] = "%s-%s-%s" % (i["year"], i["month"], i["day"])
            item["city"] = i["city"]
            item["com_registered_name"] = i["com_registered_name"]
            item["com_scope"] = i["com_scope"]
            # Join all investor names into one comma-separated string
            invse_company = []
            for j in i["investor"]:
                invse_company.append(j["name"])
            item["invse_company"] = ",".join(invse_company)
            yield item
```
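The field mapping in `parse_detail` can also be pulled out into a plain function, which makes it testable without running Scrapy. A sketch under the same key-name assumptions as the spider (the `month` key in particular is assumed, not confirmed by the article); unlike the spider, this variant zero-pads the date to `YYYY-MM-DD`. `record_to_item` is a hypothetical helper name.

```python
def record_to_item(rec: dict) -> dict:
    """Map one entry of data.data from the events list to the item fields."""
    # Treat a missing or null investor list as "no investors"
    investors = rec.get("investor") or []
    return {
        "invse_des": rec["invse_des"],
        "com_des": rec["com_des"],
        "invse_title": rec["invse_title"],
        "money": rec["money"],
        "com_name": rec["name"],
        "prov": rec["prov"],
        "round": rec["round"],
        # Zero-padded YYYY-MM-DD so the value sorts and parses as a date
        "invse_time": "%04d-%02d-%02d" % (int(rec["year"]), int(rec["month"]), int(rec["day"])),
        "city": rec["city"],
        "com_registered_name": rec["com_registered_name"],
        "com_scope": rec["com_scope"],
        "invse_company": ",".join(inv["name"] for inv in investors),
    }
```

Inside `parse_detail` the loop body could then shrink to `yield ItjuziItem(**record_to_item(i))`, since Scrapy items accept field values as keyword arguments.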

4. Write the pipeline:

```python
import pymysql

from itjuzi.settings import DATABASE_DB, DATABASE_HOST, DATABASE_PORT, DATABASE_PWD, DATABASE_USER


class ItjuziPipeline(object):

    def __init__(self):
        host = DATABASE_HOST
        port = DATABASE_PORT
        user = DATABASE_USER
        passwd = DATABASE_PWD
        db = DATABASE_DB
        try:
            self.conn = pymysql.connect(host=host, port=port, user=user, password=passwd,
                                        database=db, charset='utf8')
        except Exception as e:
            print("Failed to connect to the database: %s" % e)
            # Without a connection there is nowhere to write, so stop here
            raise
        self.cur = self.conn.cursor()

    def process_item(self, item, spider):
        params = [item['com_name'], item['com_registered_name'], item['com_des'], item['com_scope'],
                  item['prov'], item['city'], item['round'], item['money'], item['invse_company'],
                  item['invse_des'], item['invse_time'], item['invse_title']]
        try:
            self.cur.execute(
                'insert into juzi(com_name, com_registered_name, com_des, com_scope, prov, city, round, money, '
                'invse_company, invse_des, invse_time, invse_title) '
                'values (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)', params)
            self.conn.commit()
        except Exception as e:
            # Undo the failed statement so the connection stays usable
            self.conn.rollback()
            print("Failed to insert data: %s" % e)
        return item

    def close_spider(self, spider):
        self.cur.close()
        self.conn.close()
```
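The pipeline keeps 12 column names, 12 `%s` placeholders and 12 parameters in sync by hand. A sketch of a hypothetical `build_insert` helper that derives all three from one tuple, together with a `CREATE TABLE` statement for the `juzi` table, which the article does not show. The column types and lengths are guesses to adapt to your data.

```python
# Single source of truth for the column order used by the INSERT
COLUMNS = (
    "com_name", "com_registered_name", "com_des", "com_scope", "prov", "city",
    "round", "money", "invse_company", "invse_des", "invse_time", "invse_title",
)

# Assumed schema; the original article does not include one
CREATE_TABLE_SQL = """
CREATE TABLE IF NOT EXISTS juzi (
    id INT AUTO_INCREMENT PRIMARY KEY,
    com_name VARCHAR(255),
    com_registered_name VARCHAR(255),
    com_des TEXT,
    com_scope VARCHAR(255),
    prov VARCHAR(64),
    city VARCHAR(64),
    `round` VARCHAR(64),
    money VARCHAR(64),
    invse_company TEXT,
    invse_des TEXT,
    invse_time VARCHAR(32),
    invse_title VARCHAR(255)
) DEFAULT CHARSET=utf8mb4
"""


def build_insert(item, table="juzi"):
    """Return (sql, params) suitable for a pymysql cursor.execute(sql, params) call."""
    cols = ", ".join("`%s`" % c for c in COLUMNS)
    placeholders = ", ".join(["%s"] * len(COLUMNS))
    sql = "INSERT INTO %s (%s) VALUES (%s)" % (table, cols, placeholders)
    # Missing fields become NULL instead of raising KeyError
    params = [item.get(c) for c in COLUMNS]
    return sql, params
```

In `process_item`, the `execute` call would then become `self.cur.execute(*build_insert(item))`, and the table could be created once with `self.cur.execute(CREATE_TABLE_SQL)` right after connecting.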

5. Write settings.py:

```python
# -*- coding: utf-8 -*-

# Scrapy settings for itjuzi project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'itjuzi'

SPIDER_MODULES = ['itjuzi.spiders']
NEWSPIDER_MODULE = 'itjuzi.spiders'


# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'itjuzi (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
DOWNLOAD_DELAY = 0.25
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'itjuzi.middlewares.ItjuziSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
DOWNLOADER_MIDDLEWARES = {
    # 'itjuzi.middlewares.ItjuziDownloaderMiddleware': 543,
    'itjuzi.middlewares.RandomUserAgent': 102,
    'itjuzi.middlewares.RandomProxy': 103,
}

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'itjuzi.pipelines.ItjuziPipeline': 100,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'

# ITjuzi login account and password
JUZI_USER = "1871111111111"
JUZI_PWD = "123456789"

# MySQL connection settings
DATABASE_HOST = 'your_db_host'
DATABASE_PORT = 3306
DATABASE_USER = 'your_db_user'
DATABASE_PWD = 'your_db_password'
DATABASE_DB = 'your_database_name'

# User-Agent pool for the RandomUserAgent middleware
USER_AGENTS = [
    "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",
    "Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",
    "Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",
    "Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",
    "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",
    "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",
    "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.29 Safari/537.36"
]

# Proxy pool for the RandomProxy middleware
PROXIES = [
    {'ip_port': 'proxy_ip:proxy_port', 'user_passwd': 'proxy_user:proxy_password'},
    {'ip_port': 'proxy_ip:proxy_port', 'user_passwd': 'proxy_user:proxy_password'},
    {'ip_port': 'proxy_ip:proxy_port', 'user_passwd': 'proxy_user:proxy_password'},
]
```
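The settings enable `itjuzi.middlewares.RandomUserAgent` and `itjuzi.middlewares.RandomProxy`, but `middlewares.py` itself is not shown. A minimal sketch of what those two classes might look like, assuming they read the `USER_AGENTS` and `PROXIES` lists above; the bodies are an illustration, not the author's code. The proxy credentials are embedded in the proxy URL (`http://user:pass@host:port`), a form Scrapy's built-in HttpProxyMiddleware accepts in `request.meta['proxy']`.

```python
import random


class RandomUserAgent:
    """Downloader middleware: set a random User-Agent from settings.USER_AGENTS."""

    def __init__(self, user_agents):
        self.user_agents = list(user_agents)

    @classmethod
    def from_crawler(cls, crawler):
        return cls(crawler.settings.getlist("USER_AGENTS"))

    def process_request(self, request, spider):
        if self.user_agents:
            request.headers["User-Agent"] = random.choice(self.user_agents)
        return None  # let the request continue through the other middlewares


class RandomProxy:
    """Downloader middleware: route each request through a random entry of settings.PROXIES."""

    def __init__(self, proxies):
        self.proxies = list(proxies)

    @classmethod
    def from_crawler(cls, crawler):
        return cls(crawler.settings.getlist("PROXIES"))

    def process_request(self, request, spider):
        if not self.proxies:
            return None
        proxy = random.choice(self.proxies)
        user_passwd = proxy.get("user_passwd")
        if user_passwd:
            # Credentials in the URL; HttpProxyMiddleware turns them into Proxy-Authorization
            request.meta["proxy"] = "http://%s@%s" % (user_passwd, proxy["ip_port"])
        else:
            request.meta["proxy"] = "http://%s" % proxy["ip_port"]
        return None
```

With priorities 102 and 103, both run before Scrapy's own UserAgentMiddleware and HttpProxyMiddleware. If a proxy username or password contains characters such as `@` or `:`, it would need URL-quoting before being embedded.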

6. Run the project:

```
E:\>scrapy crawl juzi
```

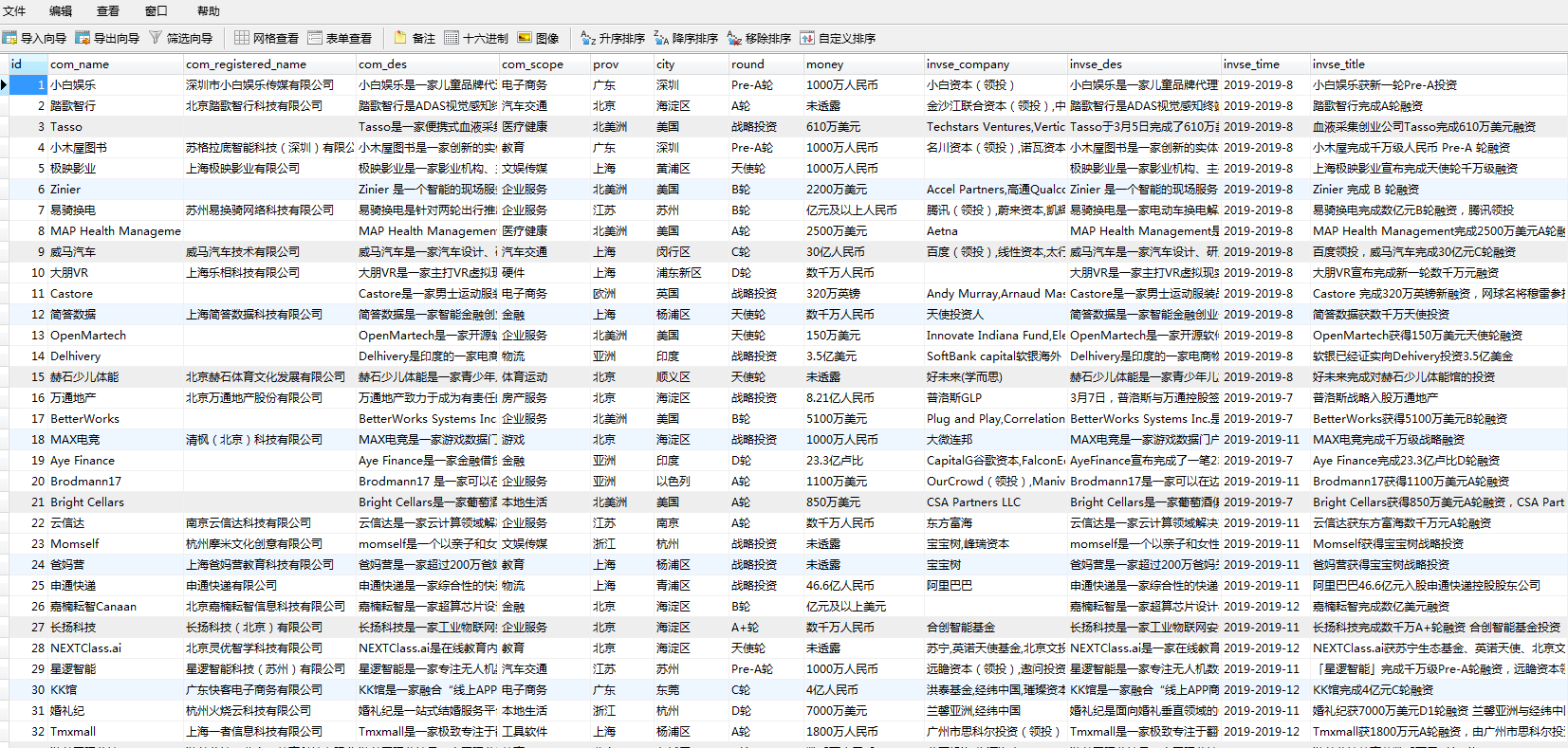

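7. Results:

PS: Detail pages are not crawled here. They can be fetched using the id of each company in the JSON returned by the list endpoint above, and the detail data is available without logging in, e.g. https://www.itjuzi.com/api/investevents/10262327 and https://www.itjuzi.com/api/get_investevent_down/10262327. Most importantly, if your account is not a VIP member, you can only crawl the first 3 pages of data, which is a real drawback. The other modules can be analyzed the same way; crawl them yourself if you need them.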
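A sketch of how those detail URLs could be derived from a list page. It assumes each entry in `data.data` carries its id under an `id` key; the article does not name the JSON field, so `id_key` is a parameter, and `detail_urls` is a hypothetical helper.

```python
DETAIL_URL_TEMPLATES = (
    "https://www.itjuzi.com/api/investevents/{id}",
    "https://www.itjuzi.com/api/get_investevent_down/{id}",
)


def detail_urls(records, id_key="id"):
    """Yield both detail URLs for every list entry that has an id."""
    for rec in records:
        rec_id = rec.get(id_key)
        if rec_id is None:
            continue  # skip entries without an id rather than build a broken URL
        for template in DETAIL_URL_TEMPLATES:
            yield template.format(id=rec_id)
```

In the spider, `parse_detail` could yield `scrapy.Request(url, callback=...)` for each of these URLs. For a non-VIP account, the page loop in `parse_info` could likewise be capped with `range(1, min(page, 3) + 1)`, given the 3-page limit described above.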

https://blog.csdn.net/sandorn/article/details/104284233